Risk governance for achieving trustworthy AI

Fujitsu / May 17, 2023

While the development of Artificial Intelligence (AI) shows enormous potential for bringing about important advances in many areas, issues stemming from unethical use have become a source of headaches for vendors and users. Many countries and businesses have established guidelines for the appropriate use of AI technologies.

This article features key learnings from the panel on trustworthy AI that Fujitsu hosted and looks into how those learnings can be applied to use the technology more responsibly in our lives.

Legislation for trustworthy AI

Reports of problems involving camera surveillance, hiring practices, loan screening and other areas have prompted calls for greater oversight of AI usage.

Responding to these issues, governments and organizations have drafted principles and guidelines for the appropriate use of AI. In April 2021, the European Union proposed its law on artificial intelligence, the AI Act, which is scheduled to take effect in 2025. Companies that violate the legislation will be subject to penalties.

Global Partnership on AI summit 2022

The Group of Seven countries launched the Global Partnership on AI (GPAI) in 2020. This multi-stakeholder initiative now has 29 member countries collaborating to bridge the gap between theory and practice on AI.

Japan presided over the 2022 summit of member countries. Fujitsu took the opportunity to organize a panel session titled “The ways to trustworthy AI in practice,” which featured in-depth discussions with world-class experts.

The participants exchanged ideas on what roles the people and organizations involved should play, and under which frameworks, bearing in mind that interest in AI ethics is shifting toward its practical side following the publication of AI legislation in the EU. The following insights were shared during the session:

・Policy makers, standardizers, and international organizations should each develop their respective frameworks.

・Policy prototyping (*1) and regulatory sandboxes (*2) are promising for evidence-based policy making and its implementation.

・The opinions of experts in standardization and application fields should inform regulations.

・AI risk governance should be achieved by having companies recognize AI as a risk and holding dialogues with stakeholders.

*1 Prototyping: A strategy in which a process or procedure is built and evaluated to study its effectiveness before full-scale implementation.

*2 Sandbox: A policy or program that enables innovative experiments to be performed without regulatory penalties so that negative impacts can be avoided after deployment.

(From left) Mikael Munck, CEO, 2021.AI; Dr. Martha Russell, Executive Director, mediaX at Stanford University; Dr. Ryoichi Sugimura, AIST, Chair of Japan national committee ISO/IEC JTC1/SC42; Matthias Holweg, American Standard Companies Professor of Operations Management, Saïd Business School, University of Oxford; Dr. Ramya Srinivasan, Research Manager, Fujitsu Research of America

Fujitsu’s AI Ethics Impact Assessment Technology

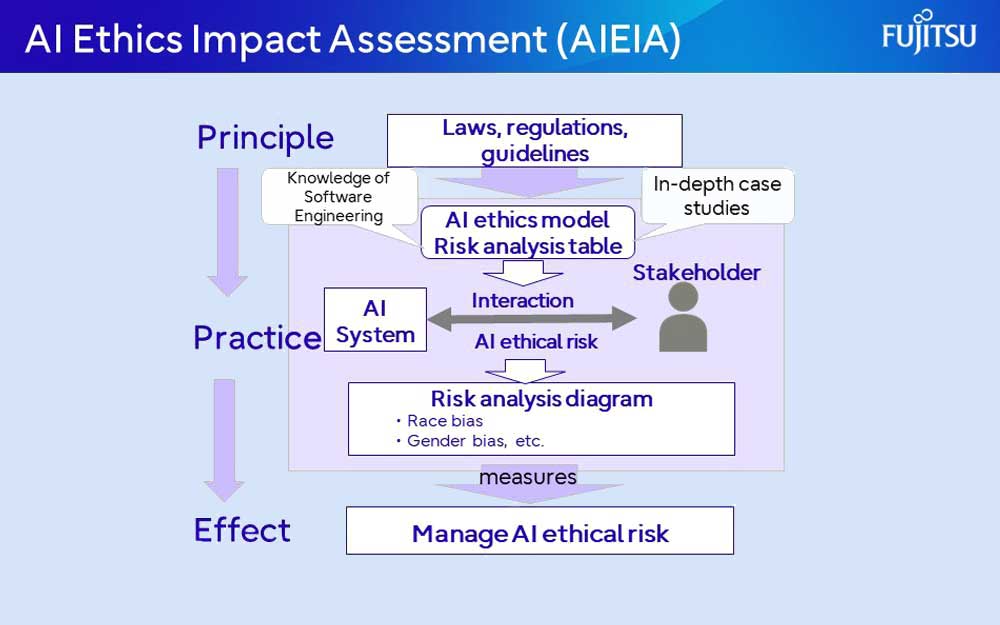

In the session, Ramya Srinivasan, a researcher with Fujitsu Research of America, presented Fujitsu’s AI Ethics Impact Assessment Technology.

Fujitsu believes that simply setting and following rules is not enough when a user requires evidence that AI complies with laws and regulations. A typical way of providing such evidence is to check a list of action items at each stage of AI development. This is an important step in assessing the risk of infringing on laws and norms. The risks in question are specific events arising from the possibility that people might use AI with malicious intent, that AI might unintentionally harm society, or that it might be used without awareness of such risks.

Fujitsu’s AI Ethics Impact Assessment Technology supports the responsible development of AI. It allows users to assess risks that could occur once the system is in operation. The technology comes with a knowledge base that indexes unethical factors drawn from past incidents with respect to the ethical requirements for AI held by stakeholders and their subgroups, as well as the algorithms and data used in training.

By tracing identified risks back to the unethical factors that cause them, the proposed technology can provide evidence of compliance with laws. The results are always verifiable and reproducible because the knowledge base is built on an approach inspired by software engineering.
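As a rough illustration of the idea described above, the following Python sketch shows how a knowledge base of past incidents might be queried to identify risks and trace them back to their underlying factors. It is not Fujitsu's actual implementation: the record fields, the sample entries, and the `assess` and `trace` functions are all hypothetical and exist only to make the concept concrete.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IncidentRecord:
    """One hypothetical knowledge-base entry derived from a past incident."""
    incident: str     # short description of the past incident
    factor: str       # unethical factor behind the incident
    requirement: str  # ethical requirement that was not met
    stakeholder: str  # stakeholder group holding that requirement
    component: str    # part of the AI system involved


# Illustrative entries only; not taken from any real knowledge base.
KNOWLEDGE_BASE = [
    IncidentRecord("hiring tool ranked one group lower",
                   "skewed historical labels",
                   "fairness", "job applicants", "training_data"),
    IncidentRecord("loan model gave unexplained rejections",
                   "opaque scoring logic",
                   "transparency", "loan applicants", "algorithm"),
    IncidentRecord("camera system tracked people without notice",
                   "undisclosed data collection",
                   "privacy", "general public", "data_collection"),
]


def assess(components):
    """Return knowledge-base records that match the system's components.

    Records are returned in knowledge-base order, so the same input
    always yields the same result.
    """
    wanted = set(components)
    return [r for r in KNOWLEDGE_BASE if r.component in wanted]


def trace(risks):
    """Group identified risks by ethical requirement, listing their factors."""
    report = {}
    for r in risks:
        report.setdefault(r.requirement, []).append(r.factor)
    return report


# Assess a hypothetical system that uses training data and an algorithm.
risks = assess(["training_data", "algorithm"])
report = trace(risks)
for requirement, factors in report.items():
    print(f"{requirement}: {', '.join(factors)}")
```

Because the lookup is a plain, deterministic query over recorded entries, anyone with the same knowledge base and the same system description can rerun the assessment and check each finding against the incident it came from, which is the sense in which results of this kind are verifiable and reproducible.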

Image model of the structure of AI Ethics Impact Assessment.

After the GPAI summit, participants from industry, government and academia told us that the meeting offered a good opportunity for sharing information and collaborating with partners across the globe. We were also praised for our expertise in voicing our opinions about specific topics. Fujitsu has taken part in the European forum “AI4People,” which discusses AI ethics, since its establishment, making a significant contribution in the fields of banking and finance. Feedback from the GPAI summit reaffirmed for us that, in putting AI ethics into practice after the publication of the AI Act, Fujitsu should pursue its studies through global cooperation and interdisciplinary approaches.

In the end, we are hopeful that our message about the importance of establishing common frameworks for AI risk governance was clearly conveyed. Japan, the U.S., Europe, the U.K. and Singapore are expected to lead efforts to counter AI risks, come up with specific approaches and build consensus. Fujitsu will continue to promote our AI Ethics Impact Assessment Technology so that it is recognized as a powerful tool to help with this important goal.
