At this year’s G20 summit, held at the end of June, leaders from around the world assembled in Osaka. Japanese Prime Minister Shinzo Abe made the most of Japan’s opportunity as the host country and held bilateral meetings with the leaders of countries including the US, China, and Russia. Of these meetings, the one that attracted the most attention was arguably the meeting between US President Donald Trump and Chinese President Xi Jinping. As the ongoing trade war between these nations affects many Japanese companies, many people watched closely to find out how the meeting went.

Of course, there were also many other things to note from the summit. One section of the leaders’ declaration at the end of the week drew particular attention from those of us working in the technology sector.

Regarding digitization, the declaration states: "We aim to promote international policy discussions to harness the full potential of data." It continues, "The responsible development and use of Artificial Intelligence (AI) can be a driving force to help advance the SDGs and to realize a sustainable and inclusive society."

In other words, the heads of state recognized that proper use of AI is important for creating sustainable societies.

What are the "AI Principles" welcomed at the G20 summit in Osaka?

In fact, AI was the largest topic at the G20 Ministerial Meeting on Trade and Digital Economy that was held in Tsukuba, Ibaraki just before the official start of the summit. At this meeting, the G20 trade ministers and digital economy ministers gathered together for the first time. Japan took the initiative in compiling a ministerial declaration that incorporated "AI Principles" to make efforts to establish an environment for creating human-centered AI.

The premise of the AI Principles is that AI is an instrument for people, and they advise preventing unregulated development and use of AI. What’s noteworthy is that the AI Principles impose obligations of transparency and explainability on AI developers and operators. Few people correctly understand how AI works. If AI makes an undesirable judgment, the user cannot help but feel unhappy or anxious. Thus, the AI Principles require that the reasons why AI makes specific judgments be provided.

The AI Principles are mentioned specifically in the G20 Osaka leaders’ declaration, which states that the G20 welcomes the AI Principles in order to foster public trust and confidence in AI technologies and to fully realize the technology’s potential.

AI can now be seen in various aspects of daily life, and it’s certain that the technology will continue to evolve further. When AI gets involved in making more important decisions in society, its decisions should not produce unhappiness or anxiety. It’s necessary to provide evidence that AI’s judgments are in fact trustworthy, and the adoption of the AI Principles signals that this should be recognized as a universal requirement.

Put simply: AI’s judgments should not be processed inside a black box; instead, they must be transparent. This two-part article presents technologies that help to explain the reasons why AI makes specific judgments.

‘Black box’ AI makes people anxious

The ‘third AI boom’ is now underway, and its driving force is the product of deep learning technology.

During the second AI boom of the 1980s, the main technological focus was on expert systems that describe experts’ knowledge. Expert systems, which solve problems by using a wealth of expertise that has been input into them, produced some positive results. However, since they require the preparation, collation, and input of an enormous amount of expert information, they are costly and time-consuming to implement. The second boom did not last long.

By contrast, in deep learning, computers discover rules to make judgments by themselves once they have been fed a massive amount of data. All that is needed is a sufficient amount of data, which eliminates the need to perform tedious tasks like feeding in experts’ knowledge. As a result, the third AI boom is still gaining momentum, and practical use of AI is expanding rapidly. AI is now past the phase in which it was out of reach for most enterprises; it has reached the phase in which businesses should seriously consider how to utilize it for themselves.

However, compared to the conventional method of manually feeding information into computers to have them make judgments, deep learning performs very complex processing, which renders it difficult to find out how judgments are made. Even when an expert analyzes the judgments made by deep learning, they will often fail to determine the judgment rationale. This is what we mean when we refer to ‘black box AI’, since the path to the computer’s judgment or outcome is obscured by its apparent complexity.

A global boom in studies on ‘Explainable AI’

The G20 agreed that AI Principles should seek to resolve this problem. The ten principles defined in the Draft AI Utilization Principles, as compiled in 2018 by the Japanese Ministry of Internal Affairs and Communications, also include the principle of transparency and the principle of accountability.

Around the world, there are more and more studies aiming to make it possible to explain the judgments made by AI. The number of presentations regarding studies on this technology, known as Explainable AI (or XAI), at well-known conferences such as NeurIPS and ICML has also been increasing. IBM has been conducting a study to detect biases when deriving judgments and to visualize judgment sequences; the company has started to provide the research product as a cloud service. In addition, simMachines, a US-based startup, has been conducting a study to utilize algorithms that quantify the similarity of data objects to each other.

Fujitsu also has been working actively on XAI by making efforts to solve the problem of black box AI with three major technologies. These are known respectively as Deep Tensor™, which learns graph-structured data and identifies inference factors; Knowledge Graph, which describes relationships among pieces of information; and Wide Learning™, which handles learning models that reveal how judgments are made.

Wide Learning™

Deep Tensor™ and Wide Learning™ are machine learning technologies that constitute the core of AI, whereas Knowledge Graph is a support technology. All three work effectively in combination. Compared with the deep learning techniques described previously, Wide Learning™ takes a completely different approach to making judgments. Part 2 of this article will provide a detailed explanation of Wide Learning™. Here, we’ll explain how the combination of Deep Tensor™ and Knowledge Graph renders AI’s judgments explainable.

Deep Tensor™ and Knowledge Graph extend deep learning

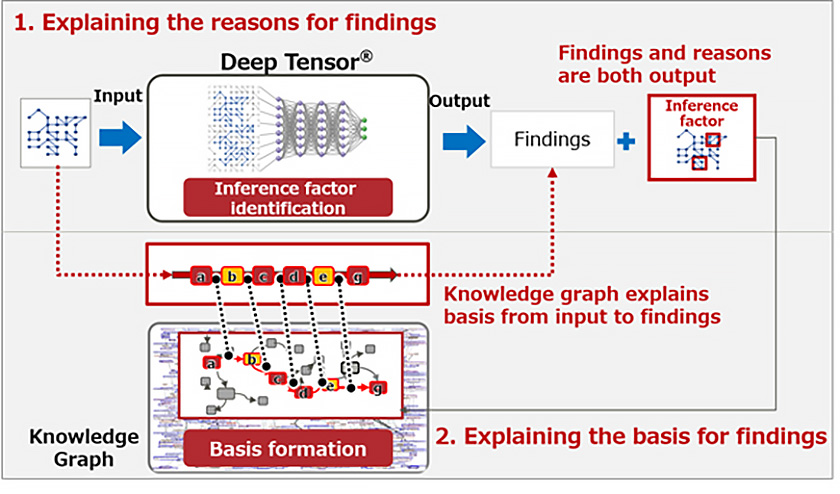

Deep Tensor™ is an advancement of deep learning technology. Its most prominent characteristic is its ability to identify not only a derived judgment but also the inference factors that contributed to it. Specifically, it converts graph-structured data, which expresses relationships (that is, connections between people and objects), into a tensor representation using a mathematical technique known as tensor decomposition, while simultaneously carrying out deep learning training. In addition, it identifies multiple factors that have influenced the findings by searching and ‘reverse engineering’ the deep learning outputs.
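To make the idea of decomposing graph-structured data a little more concrete, here is a minimal sketch in Python. It is not Fujitsu's implementation, whose details this article does not describe. It assumes the simplest possible case: a small undirected graph encoded as an adjacency matrix (a second-order tensor) and approximated by a single rank-one factor found through power iteration. The node names, the edges, and the function names `adjacency_matrix` and `rank_one_factor` are all hypothetical.

```python
import math

def adjacency_matrix(nodes, edges):
    """Encode an undirected graph as a symmetric 0/1 matrix."""
    index = {name: i for i, name in enumerate(nodes)}
    n = len(nodes)
    matrix = [[0.0] * n for _ in range(n)]
    for a, b in edges:
        matrix[index[a]][index[b]] = 1.0
        matrix[index[b]][index[a]] = 1.0
    return matrix

def rank_one_factor(matrix, iterations=100):
    """Power iteration: find a unit vector v and a scale s so that
    matrix is approximated by s * (v outer v)."""
    n = len(matrix)
    v = [1.0 / math.sqrt(n)] * n
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    s = sum(v[i] * matrix[i][j] * v[j] for i in range(n) for j in range(n))
    return s, v

# Hypothetical toy graph: a triangle A-B-C with D attached to C.
nodes = ["A", "B", "C", "D"]
edges = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D")]

strength, weights = rank_one_factor(adjacency_matrix(nodes, edges))

# The node with the largest weight contributes most to the dominant factor.
most_influential = max(zip(weights, nodes))[1]
print(most_influential, round(strength, 2))
```

The weights in the factor give each node a share in the dominant pattern of connections, which is the kind of quantity one could inspect when asking which parts of a graph mattered. A real system would work with higher-order tensors and learn the decomposition jointly with a neural network, as the paragraph above describes.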

On the other hand, Knowledge Graph is a database populated based on the meanings of a massive amount of data and the relevance of peripheral knowledge. For example, by collecting an enormous amount of information from the Web and academic papers and then generating a Knowledge Graph, a body of knowledge can be turned into a database. The resulting database is then used in combination with Deep Tensor™.

By associating the factors identified by Deep Tensor™ that have significantly influenced findings with data objects on Knowledge Graph, information relevant to the individual factors can be extracted as the basis for those findings. In other words, by connecting factors identified by Deep Tensor™ with specific pieces of information on Knowledge Graph, people can explain the reason and basis of the findings produced by the deep learning process.
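The linking step can be pictured with a small sketch. The following Python example assumes a knowledge graph stored as (subject, relation, object) triples and looks up the facts attached to each factor. The triples, factor names, and the helpers `build_index` and `explain` are hypothetical illustrations, not part of any Fujitsu product or real dataset.

```python
from collections import defaultdict

# Hypothetical triples (subject, relation, object); illustrative only.
TRIPLES = [
    ("late_payments", "indicates", "cash-flow strain"),
    ("late_payments", "documented_in", "internal credit guideline"),
    ("new_supplier_cluster", "indicates", "change in procurement"),
]

def build_index(triples):
    """Index the triples by subject so each factor can be looked up."""
    index = defaultdict(list)
    for subject, relation, obj in triples:
        index[subject].append((relation, obj))
    return index

def explain(factors, index):
    """Return, for each factor, the knowledge-graph facts attached to it.
    Factors with no matching entry map to an empty list."""
    return {factor: list(index.get(factor, [])) for factor in factors}

index = build_index(TRIPLES)
explanation = explain(["late_payments", "unknown_factor"], index)

for factor, facts in explanation.items():
    print(factor, facts)
```

In this sketch, a factor that has no counterpart in the knowledge graph simply yields no supporting facts, which makes gaps in the explanation visible rather than hiding them.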

Adoption of this technology has already begun in the financial sector. Consider the determination of credit risk when making an investment in or providing a loan to a company. Generally, credit risk determination involves the person in charge examining financial statements and performance data submitted by the company in question. However, for investments in and loans to small and medium-sized enterprises, problems have arisen, such as companies failing to submit financial statements or submitting statements that lack credibility.

This is where Deep Tensor™ and Knowledge Graph come in. AI enables credit risk determination by processing the history of actual transactions between a financial institution and a company without relying on financial statements.

The history of transactions has a graph structure and thus can be analyzed by Deep Tensor™, which determines credit risks and identifies characteristic factors that have contributed to the findings. Associating these with a Knowledge Graph built atop the financial institution’s information makes it possible to explain the reasons behind specific decisions and occurrences. If there are no problems in terms of credit risk, the financial institution can make an investment or provide a loan with peace of mind. And even if the financial institution decides to decline the investment or loan request, it can provide clear reasons for doing so.
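To see why a transaction history counts as graph-structured data, consider the following minimal Python sketch. It assumes each record is a (payer, payee, amount) tuple and aggregates the records into weighted directed edges. The company names, amounts, and the helpers `build_transaction_graph` and `counterparties` are hypothetical; the article does not describe the actual data format used by financial institutions.

```python
from collections import defaultdict

# Hypothetical transaction records: (payer, payee, amount). Not real data.
RECORDS = [
    ("Company A", "Supplier X", 120.0),
    ("Company A", "Supplier X", 80.0),
    ("Customer Y", "Company A", 300.0),
    ("Company A", "Supplier Z", 50.0),
]

def build_transaction_graph(records):
    """Aggregate records into weighted directed edges:
    (payer, payee) -> total amount transferred."""
    edges = defaultdict(float)
    for payer, payee, amount in records:
        edges[(payer, payee)] += amount
    return dict(edges)

def counterparties(graph, company):
    """List every party that `company` pays or is paid by."""
    found = set()
    for payer, payee in graph:
        if payer == company:
            found.add(payee)
        if payee == company:
            found.add(payer)
    return sorted(found)

graph = build_transaction_graph(RECORDS)
partners = counterparties(graph, "Company A")
print(graph)
print(partners)
```

Companies become nodes and money flows become edges, so the same record set that a bank already holds can be handed to a graph-based learner without any financial statements.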

Going forward, we will see more cases in which we leave judgments up to AI, but we will inevitably doubt these judgments on occasion. In such a moment, if the AI can explain how it reached its judgment, we may come to understand and be satisfied with the result. In Part 2, we’ll explore Wide Learning™, the other XAI technology that Fujitsu has developed, and how it fits in with this wider drive towards human-centric AI.

Author Information

Tomofumi Kimura

Senior Research Officer

Nikkei BP Intelligence Group

Mr. Kimura joined Nikkei BP after graduating from the Department of Mechanical Engineering, Keio University, where he received a master’s degree in 1990. He covered and wrote articles mainly on cutting-edge processing technologies and production management systems for Nikkei Mechanical, a manufacturing industry magazine. Afterwards, he was involved in the publication of the first issue of Nikkei Digital Engineering, a magazine on utilizing computer techniques in the manufacturing industry, such as CAD/CAM and SCM. In January 2008, he assumed the position of chief editor of the Tech-On! website (the present Nikkei xTECH). In July 2012, he assumed the position of chief editor of Nikkei Business and its digital edition, Nikkei Business Digital (the present Nikkei Business Electronic Edition). He designed business-related content and led website operation and app development. In January 2019, he organized and established a project for Nikkei Business Electronic Edition. He has served in his present post since April 2019. He is in charge of content consulting services for leading enterprises and designing content for websites such as Beyond Health.